Golf Rankings Rant

This is the perfect time to do this. Illinois won its regional, so this won't be me, the biased Illini fan, screaming about how his golf team got screwed. In fact, as you'll see, these rankings being so awful (yes, awful) actually placed a 2-seed in Illinois' region that had no business being a 2-seed. For some, bad rankings inflating teams well above their talent level = an easier path to La Costa.

But the results of these six regionals give us definitive proof that the thing I've been ranting about all spring (that the new team rankings are bad bad bad) wasn't just in my head. The teams that appeared to be overrated by the new rankings are mostly the teams that flamed out in their regionals. We'll get to the numbers in a bit (oh, I have numbers) but first let me give you an overview.

Last summer, former UCLA men's coach Derek Freeman approached the NCAA with an idea. What if the whole ranking system was overhauled? The NCAA had used Golfstat for decades, not only for scoreboards but also for ranking the players and teams. Those rankings were the NCAA's RPI or NET for golf, and if you were the #12 team in the computer rankings then you got the last 2-seed at one of the six NCAA regionals.

He announced that the NCAA had chosen Spikemark back in July on Instagram:

Freeman's pitch was more-or-less this: The PGA Tour uses a 'strokes gained' (analytics) approach now, so college should do the same and ditch the old head-to-head rankings maintained by Golfstat. The NCAA agreed and dumped Golfstat, instead signing up for Freeman's yet-to-be-developed "Spikemark Golf."

The big problem: he couldn't develop it. He didn't hire the right team to build it out (and this included hiring people who had been laid off by Golfstat when Spikemark effectively put Golfstat out of business). This is a separate issue from the rankings, but it's important in telling the whole story. So I'll cover the website/scoring issues and then we'll get to the rankings issues.

In September, when the first college golf tournaments tried to use Spikemark to record live scoring, the platform crashed (to the point where college golf scores were being reported by staffers tweeting pictures of paper score cards). At first Freeman claimed that it was a cyber attack, but after a month of trying to fix it, he gave up. The rankings were going to be delayed, and Spikemark as the replacement for Golfstat was never going to happen.

Spikemark essentially folded and the rankings were moved to a company from the UK called "Clippd." The scoring? Well, the teams would have to figure out the scoring on their own. Golfstat, which had no staff, scrambled to reassemble everything. If you remember me ranting about the first tournament of the spring season being scored on "LeaderboardKing", that's because individual tournaments had to scramble and find someone (anyone) who could provide live scoring. Golfstat is back up and running now (as you saw the last few days), but I have no idea if that's permanent or if the NCAA is going to try to put this out for bid again.

That's the "how Spikemark killed Golfstat and then couldn't deliver on what they promised" side of this. But there's also the ranking side. If you follow me on Twitter or read this space you know my rants about this spring. The rankings are wrong. They're just wrong. I get that Mark Broadie is involved and that he's the Bill James of golf, but somewhere in the process of "tournaments should be weighted by strength of field", something went wrong.

I noted in my golf preview back in February that this was an issue. I linked this Brentley Romine article with quotes from coaches who were giving the new rankings some serious side-eye. And I pointed to some anomalies with Illinois that had me seriously scratching my head. Those just got worse as the season went on.

Before we get to that, I feel like I should note that Strokes Gained is an excellent way to view golf. Vegas fears it because Strokes Gained can predict which players will play well on which PGA Tour courses. Player X, who doesn't drive it particularly well, is 5th on tour in "Strokes Gained - Approach" and that's really going to help him at Harbor Town because it's not a bomber's paradise. That kind of thing. Gamblers dive deep into Strokes Gained when betting on every tournament.

It's the application of a Strokes Gained model to team golf that has me scratching my head. I completely understand how Strokes Gained works within a closed system of 200-or-so players who play in PGA Tour events. Just compare those 200 guys to each other. And I also understand how they worked out the weighting so that they can compare Korn Ferry Tour players to PGA Tour players by twisting the "strength of field" knob. There's enough overlap (PGA players playing in KFT events; KFT players getting a few events on the PGA Tour) that they can dial in the weighting based on historical performance.

The idea behind this new college ranking system was to do the same. The old system, maintained by Golfstat (if you're wondering why the Golfstat screen no longer has the rankings next to players, it's because they're no longer in charge of those rankings), was a head-to-head system. These teams beat those teams in these tournaments. Or, when ranking players, these players beat those players. If a golfer had beaten 347 golfers and lost to 34 across his 11 tournaments, he was going to be ranked pretty high.

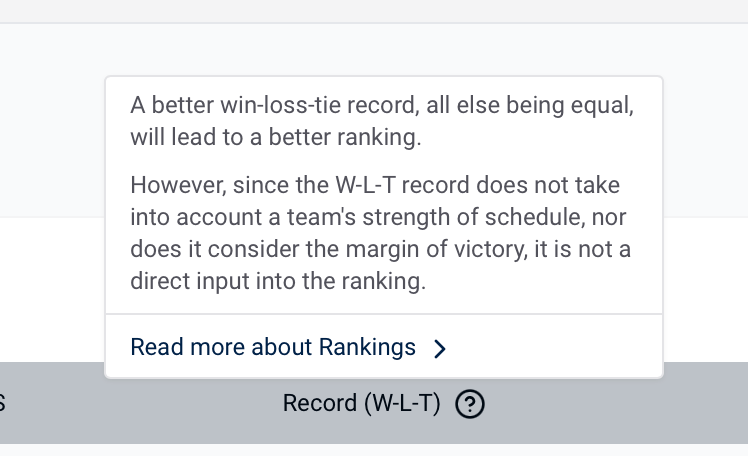

The new system doesn't do that. Or perhaps I should say that it is philosophically opposed to that. The rankings specifically point out that they don't look at head-to-head. On the rankings page at Clippd there's a little question mark next to "won-lost-tied" on the chart and when you hover over it you get this:

That little statement there, for me, answers my "why do these rankings not make any sense?" question immediately. They're not looking at W-L-T, they're looking at strength of schedule and margin of victory. That's fine. KenPom does the same. SP+ does the same. But you have to be very very very very very precise to get the weighting right. And the weighting isn't right.

It's just not right. I've been ranting about this all year. When the rankings came out on April 10th, I went on this rant on Twitter. And I kept noting all spring how teams simply didn't move in the rankings. Where you were slotted in January when they debuted is where you stayed. Here was my tweet about that three weeks ago:

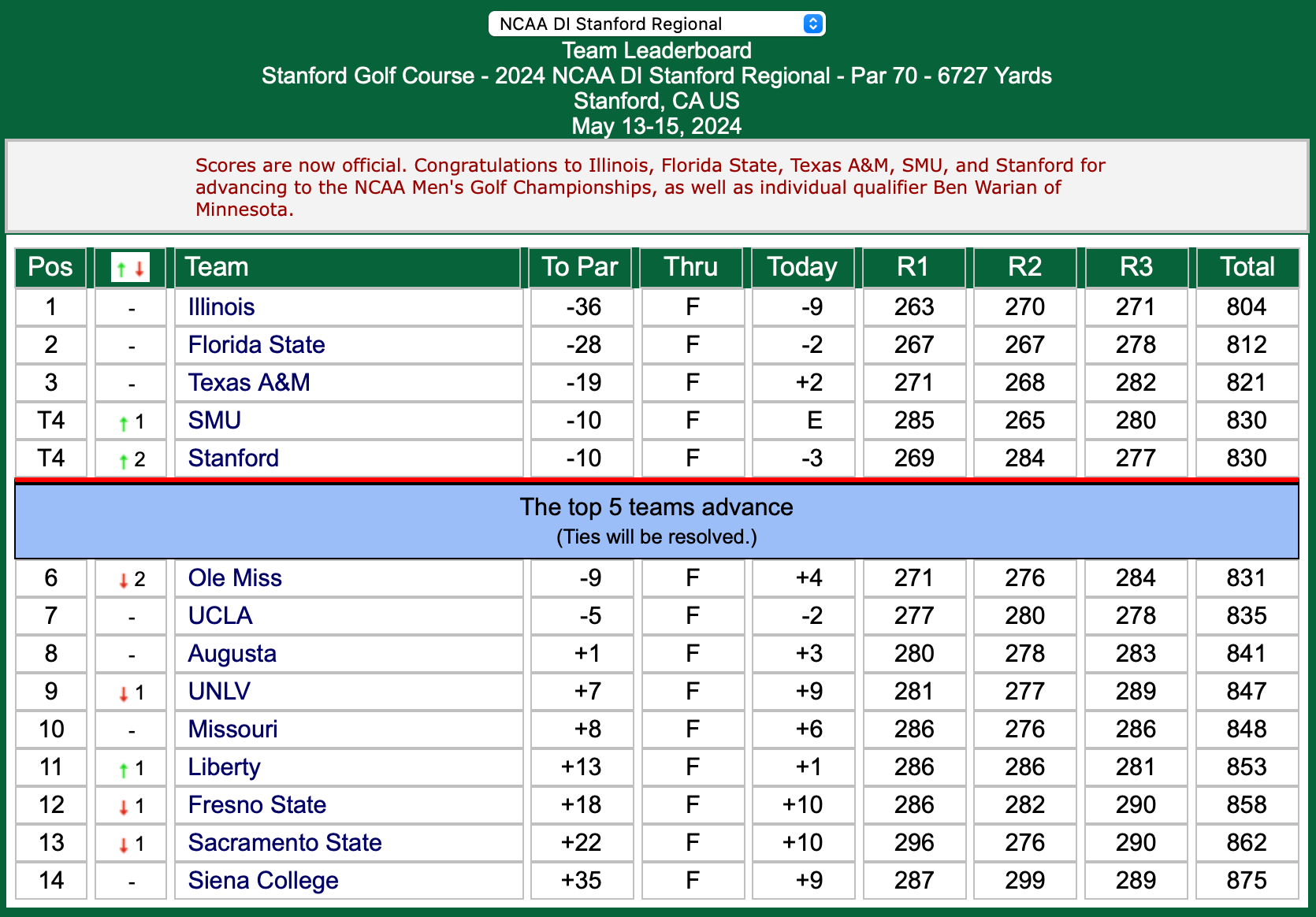

"What were you saying there, Robert? Were you saying that Ole Miss should have tumbled in the rankings and didn't deserve to be the #7 team in the country when the tournament seedings came out?" Yes. That's exactly what I'm saying. It's why this happened to Ole Miss:

Ole Miss, the #7 team in the country, not even making it to the NCAA Championships. In fact, only five of the top 10 teams made it to La Costa. And are you ready for this? The five that missed are the five top-10 teams with poor W-L-T numbers, the data the rankings page brags about eliminating.

W-L-T isn't perfect, of course. There's no weighting. If a team chooses to play an incredibly easy schedule, they'll have a great W-L-T record because they beat up on a bunch of nobodies. But with the three SEC teams and the two Pac 12 teams that failed to qualify, they all played fairly similar schedules to the rest of the top-10. Those are all coaches trying to get their teams prepared for the NCAA Championships.

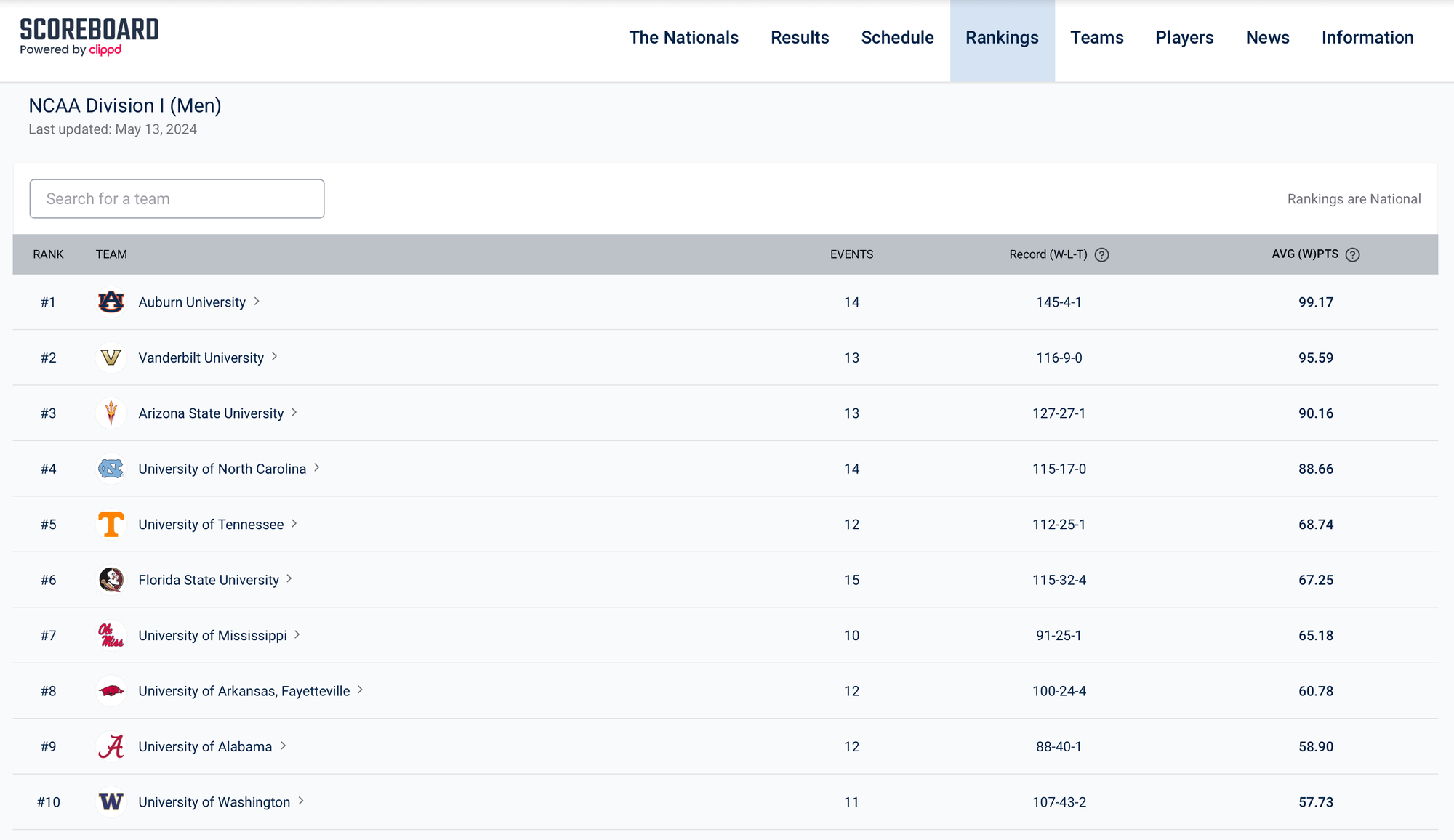

And here's the data for that top 10. First, the Clippd rankings from yesterday:

Now just look at the losses for a second. In the top four, Auburn had four (!), Vandy had nine, #4 North Carolina had 17, and Arizona State had 27. The old rankings would have said "I don't know, Arizona State - are you really top-4 material?" The new rankings said "yes they are." And then Arizona State failed to qualify yesterday. Those 27 losses were a giant flashing "not really the #3 team" sign.

Better yet, just look at Alabama and Washington there at the bottom, two teams that failed to qualify yesterday. Alabama was 88-40 and they were ranked 9th. Washington was 107-43 and they were ranked 10th. Here's a list of the teams ranked 11th through 20th who had fewer losses than 40:

#12 Virginia - 24 losses

#18 Illinois - 27

#15 Florida - 32

#17 Georgia Tech - 34

#16 Oklahoma - 37

Would you believe that all of those teams qualified while Alabama and Washington did not? You would? Good. You're understanding what I'm saying here.

My point: if you went with a flat winning percentage – no statistics, no analytics, just W-L-T – you would have had a much better seeding list than these rankings. Virginia, Illinois, and Oklahoma would have been ranked in the top 10. And this is where I note that Illinois and Oklahoma won their regionals while Virginia finished 2nd to #1 Auburn.
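For anyone who wants to see how little math "flat winning percentage" involves, here's a minimal sketch. It only uses the two full records quoted above (Alabama at 88-40, Washington at 107-43). The function name `win_pct` is mine, and counting a tie as half a win is my assumption, since I didn't list tie counts and this isn't necessarily how Golfstat handled them.

```python
def win_pct(wins, losses, ties=0):
    """Flat winning percentage. Ties count as half a win (an assumption)."""
    total = wins + losses + ties
    if total == 0:
        return 0.0  # no head-to-head results yet
    return (wins + 0.5 * ties) / total


# Only the two complete W-L records quoted in this post; ties unknown, so 0.
records = {
    "Alabama": (88, 40),
    "Washington": (107, 43),
}

# Sort best to worst by flat winning percentage.
ranked = sorted(records.items(), key=lambda kv: win_pct(*kv[1]), reverse=True)
for team, (wins, losses) in ranked:
    print(f"{team}: {wins}-{losses} ({win_pct(wins, losses):.3f})")
```

No strength of schedule, no margin of victory. Feed every team's record through the same function, sort, and you have the seeding list I'm describing.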

But even that doesn't tell the full story. I need to zero in on one team to talk about why I've been saying these rankings are flawed. And that team is future Big Ten opponent Washington.

In September, Washington played a tournament at their home course. This is an advantage, of course, because they know every read on every green. And they know to avoid the left side of the fairway on 11 because from the left the green is sloping away from you. That kind of stuff.

At that tournament, Washington blew away the field. They put up a -40 and the next closest competitor was Oklahoma at -14. An impressive performance, home course advantage or no home course advantage. But it shouldn't keep them in the top ten all season long.

Because of that performance as well as two third place finishes and a fourth place finish, Washington entered the rankings at #3 when they were released in January. And their top two players, Petr Hruby and Taehoon Sung, entered the rankings as the #18 and #19 players in college golf. Right there my "I know too many college golfer names because I look at the rankings all the time" brain forced a head-tilt. A few good fall tournaments and they're 18th and 19th? And Washington is 3rd? No. Just... no.

But hey, Indiana State basketball was top-10 in the NET rankings when they debuted in December, so what do I always tell people? Long way to go, and the rankings will work it out. They'd have to keep it up all season, and teams that shouldn't be there usually don't keep it up.

Washington didn't. But they stayed in the top 10.

Washington finished 9th out of 16 teams in the Lamkin Invitational, losing to teams like Loyola Marymount and St. Mary's. They finished 15th out of 17 teams at Arizona State's Thunderbird Intercollegiate (15th! At one tournament! For a team that was supposed to be #7 in the country!). Yes, teams can have a bad tournament (I just wrote about Illinois losing to Rutgers and Michigan State at the Sea Island tournament), but here we're talking about a team that's supposed to be 7th nationally.

At the end of the regular season, Washington did technically fall out of the top-10 for a few days. They dropped to 11th. But they then finished tied for 4th at the Pac 12 Tournament and that was good enough to move them up to 10th again. There goes that Strength Of Field dial again, being cranked all the way to the right.

I mean, just look at the other rankings for someone like Taehoon Sung. I've talked about the PGA Tour University rankings before (a way for college golf seniors to get status on professional tours through their four years of success in college). That list, which is seniors only, has Sung with the 41st-best resume among all seniors. DataGolf (which I believe I've also mentioned before) has Sung as the 164th player in college golf going into these NCAA Regionals.

There is no way in hell there should then be another set of rankings – the only set of rankings that matters because it sets the NCAA Tournament seeding – that has him as the 12th best college golfer as late as February 14th and only drops him to 38th by the time regionals come around. Someone needs to only look at two numbers – 41st-best SENIOR on the PGA Tour U rankings that are used to determine Tour Cards yet 38th best senior, junior, sophomore, or freshman on this other list – and conclude that the weighting is off.

I mean, I hate to do this because he's an Illini but the best example on the entire list might be Illini walk-on Timmy Crawford. Playing as an individual at the Dayton Flyer Invitational last fall, Crawford caught fire. He won the tournament by 10 shots (and that wasn't part of the Illini team score). It's more or less a practice round because you're not participating in the team tournament. Your score doesn't count.

Because he won by 10 and because margin of victory is so heavily weighted, when the rankings debuted, Crawford was listed as the #51 player in college golf. And even though he never recaptured that performance and isn't even in the Illini lineup right now (nor is he the 6th man), he's still, to this day, labeled as the 136th-best college golfer. Here's how the Clippd rankings have the Illini players after the NCAA Regional scores were factored in:

Max Herendeen - 30th

Jackson Buchanan - 38th

Timmy Crawford - 136th

Ryan Voois - 161st

Tyler Goecke - 176th

Piercen Hunt - 348th

One tournament, playing as an individual, where he beat a kid from Illinois State by 10 shots, still has Crawford ranked higher than Cole Anderson from Florida State who just finished 10th at the Stanford Regional this weekend. And if you want some more backup to those numbers...

DataGolf ranking for Anderson: 99th

DataGolf ranking for Crawford: 400th.

Do you see what I'm saying? The weighting is 100% flawed. I don't even need to see the formula. When I go through the DataGolf numbers, they all make sense. Yes, Crawford would be somewhere around the 400th-best college golfer. Yes, Anderson should be somewhere around 100th. So to see Crawford ranked 136th and Anderson ranked 153rd? On what's supposed to be the most advanced ranking system that has been developed for college golf? Change it. It's bad.

I'll close by saying that I'm not really pointing the finger at Mark Broadie here. If you read the rankings explanation page on Clippd, you'll see a lot of this under certain line items:

This was the strong preference of the membership and the NCAA golf committees.

I'm guessing that committee put some things into place that might cause Mark Broadie to respond with "well, that's not exactly how Strokes Gained works..." My issue is simply with the weighting factors chosen. If I had one minute to speak in front of this "NCAA golf committee", I would make two simple points:

- It's obvious that the weighting for strength of schedule is off. None of these SEC teams who were playing each other and not being penalized for poor finishes because "the field was so strong" should have been seeded as high as they were. And their failures at the NCAA Regionals show this.

- It's obvious that the factoring for scoring margin can't be modeled after the PGA Tour factoring. Yes, a 10 shot win at a PGA Tour event tells you that you have a ridiculously talented golfer. But a 10 shot win at the Dayton Flyer Invitational by a kid who isn't even part of the team competition and is just there to get reps in should not be weighted anywhere close to the same.

Again, all of this matters because these are the seeds. On Selection Wednesday for college golf, the Last Team In and the First Team Out came from these exact rankings. They just add in the automatic qualifiers (conference tournament champions) and then go right down the list. Coaches keep or lose jobs based on this formula. And I guarantee that some teams got in and some teams were left out because of these faulty rankings.

Washington was maybe the 34th-best college golf team. Their 8th place finish at the Rancho Santa Fe Regional was only shocking because they were ranked 10th and got the 2-seed. So many of these highly-ranked teams that didn't qualify had been showing us they weren't very good (Ole Miss losing to Auburn by 34 strokes) but the rankings refused to drop them because of it.

Wait, this was supposed to be the "I'll close with" section. I'll just say one more thing and then get out of here.

I'm not saying that the Big Ten is better than the SEC. The SEC is clearly deeper (although the differential at NCAA's is only 5 SEC teams to 3 Big Ten teams). I'm saying that the weighting is off. One final example.

The way that the weighting is applied is that you get a certain number of points for each tournament. Illinois got 115.81 weighted points for winning the Stanford Regional. Average out all of your weighted points for each tournament and that's how they rank teams top to bottom.
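Here's a rough sketch of that averaging step as I understand it. This is not Clippd's actual code or formula. The function name `team_rating` is made up, and the only inputs are the two Illinois point totals quoted in this post, so the result is an illustration of the arithmetic and not Illinois' real rating (which would include every tournament they played).

```python
from statistics import mean


def team_rating(points_by_event):
    """Average the weighted points a team earned across its tournaments."""
    return mean(points_by_event.values())


# Only the two Illinois figures quoted in this post; a real rating
# would include every event on the schedule.
illinois = {
    "Stanford Regional (1st)": 115.81,
    "Big Ten Championships (2nd)": 51.25,
}

print(f"Illinois, two-event average: {team_rating(illinois):.2f}")
```

Since everything gets averaged, the number of points handed out for each finish is the whole ballgame. If the strength-of-field dial is set wrong, every average built on top of it is wrong too.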

#18 Illinois won the Stanford Regional and qualified for NCAA's. #9 Alabama failed to qualify at the Chapel Hill Regional. Maybe that's just a bad tournament for Alabama and a good tournament for Illinois, but is there anything in the numbers that might suggest that Alabama was overrated?

Weighted points Illinois received for finishing 2nd at the Big Ten Championships: 51.25

Weighted points Alabama received for finishing 8th at the SEC Championships: 55.97

At some point, "lost to a lot of teams all season" has to matter in some form. In Alabama's last three tournaments before the regional they finished 10th out of 16 at the Valspar, finished 3rd (but 30-back) at the Mason Rudolph Championship, and then finished 8th at the SEC Championships. If you do those three things and actually climb from 10th to 9th in the rankings...

Change. The. Weighting.
